Rust's Perf Survey'25, AI eating code, Apache Wayang, and Data Engineering's Wall Street Moment

When performance matters more than preferences: the evolving reality of data engineering work with AI.

🚀 Rust's Build Performance Reality Check: 3,700 Developers Weigh In

The Rust community just dropped some serious insights about compile times, and spoiler alert: it's complicated. The 2025 Compiler Performance Survey pulled in over 3,700 responses with an average satisfaction rating of 6/10.

Incremental rebuilds are the biggest pain point - 55% of developers wait more than 10 seconds for rebuilds (ouch!)

Workspace dependencies create unnecessary cascade rebuilds - change one crate, recompile everything downstream

Linking always starts from scratch - no incremental magic here, but LLD adoption is coming to help

The fascinating part? Build experience varies wildly across workflows. Some developers love Rust's performance compared to C++, while others point enviously at Go's speed. What's clear is that optimizing for different workflows requires completely different solutions (which explains why this is so hard to solve universally).

⚡ From 8 Days to 90 Minutes: The 75GB CSV Streaming Success Story

A data engineer just shared their journey of ingesting a massive 75GB CSV into SQL Server without melting their laptop.

Java's InputStream + BufferedReader combo proved to be the hero - streaming without loading everything into memory

Batching and parallel ingestion techniques cut processing time from 8 days to 90 minutes per file

Tool selection matters more than you think - the "data science" tools aren't always the right hammer for the nail in front of you

This reinforces something we see repeatedly: when dealing with truly large datasets, stepping outside your comfort zone language-wise can yield dramatic performance improvements (and it can be fun, too).
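For the curious, here is a rough sketch of what the stream-and-batch pattern can look like in Java. It is not the original author's code: the class name `CsvBatcher`, the method `streamInBatches`, and the `sink` callback are all made up for illustration, and the sketch assumes a UTF-8 file with a single header row and no quoted newlines inside fields. In a real ingest, the sink is where a JDBC `PreparedStatement` with `addBatch()` / `executeBatch()` would plausibly go.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical helper: reads a CSV line by line and hands rows to a sink in fixed-size batches.
final class CsvBatcher {

    // Returns the number of data rows read (header and blank lines excluded).
    static long streamInBatches(InputStream in, int batchSize, Consumer<List<String>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        long rows = 0;
        // BufferedReader pulls the file through a small buffer, so memory use
        // depends on the batch size, not on the file size.
        try (BufferedReader reader =
                 new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line = reader.readLine(); // assumption: first line is a header, skip it
            if (line == null) {
                return 0; // empty input
            }
            List<String> batch = new ArrayList<>(batchSize);
            while ((line = reader.readLine()) != null) {
                if (line.isEmpty()) {
                    continue; // ignore blank lines
                }
                batch.add(line);
                rows++;
                if (batch.size() == batchSize) {
                    sink.accept(batch);                 // e.g. one database round trip per batch
                    batch = new ArrayList<>(batchSize); // start a fresh batch
                }
            }
            if (!batch.isEmpty()) {
                sink.accept(batch); // flush the final partial batch
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return rows;
    }
}
```

Calling it with a `FileInputStream` and a batch size in the low thousands would be a reasonable starting point; the right number depends on row width and the database, so treat it as something to measure rather than a recommendation. Parallel ingestion, the other half of the story, could be layered on top by having the sink hand each batch to a worker pool.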

🤖 "70% of My Workload is All Used by AI" - The New Data Engineering Reality

The r/dataengineering community is buzzing with a confession that hits close to home: most data engineering work is now AI-driven. This isn't just about building ML pipelines anymore.

AI systems are becoming the primary consumers of data infrastructure - not just dashboards and reports

Data engineers are evolving into AI enablement specialists whether we planned for it or not

The skills gap is real - traditional ETL knowledge needs to expand into MLOps, vector databases, and real-time inference pipelines

A good way to think about this is: if your data architecture wasn't designed with AI workloads in mind, you're probably going to need some retrofitting soon (if you haven't already started).

📈 Oracle Hits Record Highs as Wall Street Discovers Data Engineering

Oracle's stock is shattering records, and the Street is attributing it directly to the AI and data engineering boom across enterprises. This isn't just another tech stock story.

Enterprise data modernization is driving serious investment - companies are finally putting real money behind their data strategies

Data engineering roles are becoming strategic, not just operational - we're moving from "keep the lights on" to "enable the business transformation"

The talent premium is real - when your stock price depends on data capabilities, you pay up for the people who can deliver them

What's interesting here is how quickly the narrative has shifted from "data engineering is a cost center" to "data engineering is a competitive advantage" (and Wall Street has noticed).

🔄 Apache Wayang: The Federation Play for Multi-Engine Processing

Apache Wayang (still incubating) is making some bold claims about federated data processing - up to 150x faster than centralized platforms by keeping data in place.

Application independence across processing engines - write once, run on Spark, Flink, PostgreSQL, Java Streams, or whatever

In-situ processing philosophy - avoid the expensive data movement that kills performance in traditional architectures

Minimal code changes for engine switching - the holy grail of not being locked into specific processing frameworks

This feels like a response to the very real pain of vendor lock-in and the complexity of managing multiple processing engines in modern data stacks. Time will tell if the federation approach delivers on its promises, but the problem it's solving is definitely real.

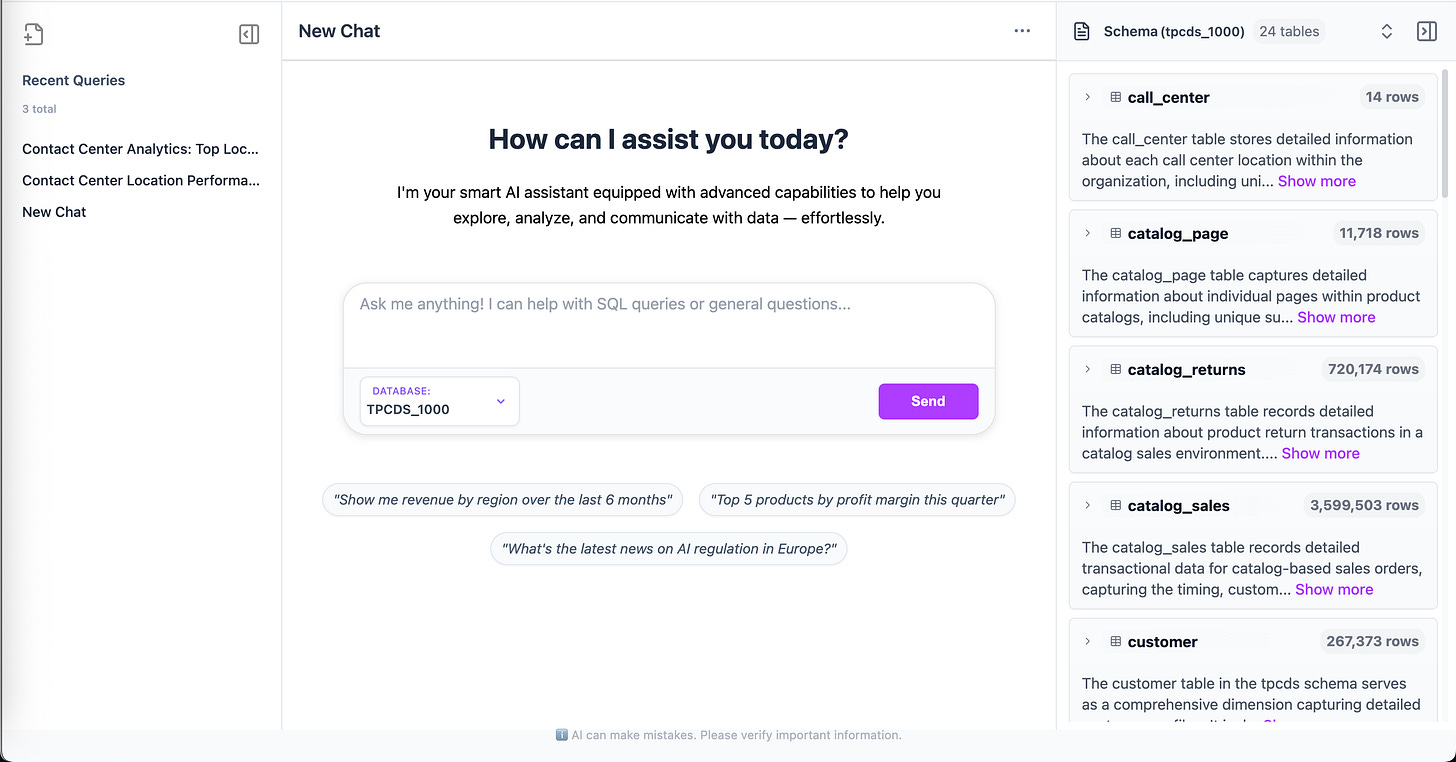

💡 Meet e6data AI Analyst (Early Access)

Ask questions exactly as you think them, get contextual multi-turn conversations, and actually enjoy the follow-up process for once.

95%+ accuracy on enterprise workloads - because "pretty good" isn't good enough when you're dealing with 1000+ tables and complex relationships

Multi-turn conversations - make your data talk back

Zero migration required - works with your existing data platform (we know how much fun those migration projects are)

Community & Events:

The team published a new technical deep dive on Partition Projection in Data Lakehouses this week!

We are also hosting the next Lakehouse Days with Bengaluru Streams on 27 September, covering data streaming, lakehouse architecture, and the future of real-time analytics.

You'll also find us at:

Big Data London (24-25 Sep, 2025)

Databricks World Tour Mumbai (19 Sep, 2025)

AND we're hunting for data engineers who get excited about AI and aren't afraid to build the future. No corporate speak here - just cool problems and smart people to solve them with.