LLMs Stall, Queries Spill, and e6data Bridges Delta, Hudi, Iceberg & Polaris

Tactics to tame runaway memory, curb Bronze-layer disasters, and refactor tests, plus a dose of AI drama. Learn how to build a modern data pipeline in Snowflake, and catch our product updates on Iceberg, Polaris, Hudi, and Delta.

🛠️ OpenRewrite Beats Gemini AI at JUnit Migration

This week, our senior engineer pitted Google’s Gemini against OpenRewrite to migrate tests from JUnit 4 to JUnit 5. Gemini timed out; OpenRewrite finished in minutes.

• Gemini burned API quota and stalled on “complex” tests

• OpenRewrite migrated nearly all tests in ~10 min; only edge cases needed tweaks

• Moral: pick the right-sized tool. LLMs aren’t a silver bullet for structured code refactors

Our Takeaway: Rule-based refactoring still trumps LLM magic for deterministic migrations. The big aha moment for him: the migrated suite passed every test except tpox (a known failure) and one geospatial test, which uses assertThrows and now receives a different error message than the one it expects.
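To see why deterministic rules suit this kind of migration, here is a toy sketch in Python. It is not how OpenRewrite works internally (OpenRewrite applies recipes to a lossless semantic tree, not regexes); the RULES list and migrate() function are hypothetical and cover only a few JUnit 4 → 5 renames. The point is that the same input always yields the same output, and re-running the rules changes nothing.

```python
import re

# Hypothetical toy rules: (pattern, replacement) pairs for a few JUnit 4 -> 5 renames.
# Deliberately NOT handled: @Test(expected = ...) -> assertThrows, @BeforeClass -> @BeforeAll,
# @Rule, runners, and Assert -> Assertions (JUnit 5 moves the failure message from the
# first argument to the last, which a regex cannot reorder safely).
RULES = [
    (re.compile(r"\bimport org\.junit\.Test;"), "import org.junit.jupiter.api.Test;"),
    (re.compile(r"\bimport org\.junit\.Before;"), "import org.junit.jupiter.api.BeforeEach;"),
    (re.compile(r"\bimport org\.junit\.After;"), "import org.junit.jupiter.api.AfterEach;"),
    # \b stops these from matching @BeforeClass or an already-migrated @BeforeEach.
    (re.compile(r"@Before\b"), "@BeforeEach"),
    (re.compile(r"@After\b"), "@AfterEach"),
]


def migrate(source: str) -> str:
    """Apply every rule in order; the same input always gives the same output."""
    for pattern, replacement in RULES:
        source = pattern.sub(replacement, source)
    return source
```

Constructs like @Test(expected = ...) need structural rewriting into assertThrows, which is exactly where a regex toy stops being enough and a tree-based tool earns its keep.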

💾 Databases Chase Unified Memory Limits

An in-depth post argues that modern query engines must police themselves with strict memory caps and spill-to-disk safety nets, and that language choice matters.

• Two must-haves: kill a query before it OOMs, and spill gracefully to disk when it would otherwise blow its memory budget

• Go’s silent allocations make tracking painful; Rust’s lack of fallible allocators is “annoying” but fixable

• Teams layer custom allocators/monitors to meter both memory and CPU for every pipeline operator
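A minimal Python sketch of the two must-haves, assuming a hypothetical per-query MemoryPool with a soft limit (spill) and a hard limit (kill), and an operator that charges a fixed, hypothetical row_size per buffered row. The operator is an external sort: when a reservation fails it writes a sorted run to a temp file, frees its memory, and merges all runs at the end. Real engines meter actual allocations per operator; every name here is illustrative.

```python
import heapq
import pickle
import tempfile


class QueryKilled(Exception):
    """Raised when a query would exceed its hard memory cap."""


class MemoryPool:
    """Hypothetical per-query governor: soft limit asks operators to spill, hard limit kills."""

    def __init__(self, soft_limit: int, hard_limit: int) -> None:
        if soft_limit > hard_limit:
            raise ValueError("soft_limit must not exceed hard_limit")
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.used = 0

    def try_reserve(self, nbytes: int) -> bool:
        """Reserve under the soft limit; False means 'spill first'."""
        if self.used + nbytes <= self.soft_limit:
            self.used += nbytes
            return True
        return False

    def force_reserve(self, nbytes: int) -> None:
        """Reserve up to the hard limit, or kill the query instead of letting it OOM."""
        if self.used + nbytes > self.hard_limit:
            raise QueryKilled(f"needs {self.used + nbytes} bytes, cap is {self.hard_limit}")
        self.used += nbytes

    def release(self, nbytes: int) -> None:
        self.used -= nbytes


class SpillingSorter:
    """External sort that spills sorted runs to temp files when memory is tight."""

    def __init__(self, pool: MemoryPool, row_size: int) -> None:
        self.pool = pool
        self.row_size = row_size  # assumed fixed cost per buffered row
        self.buffer = []
        self.runs = []  # temp files, each holding one sorted run

    def add(self, row) -> None:
        if not self.pool.try_reserve(self.row_size):
            self._spill()
            self.pool.force_reserve(self.row_size)  # may raise QueryKilled
        self.buffer.append(row)

    def _spill(self) -> None:
        if not self.buffer:
            return
        run = tempfile.TemporaryFile()
        for row in sorted(self.buffer):
            pickle.dump(row, run)
        run.seek(0)
        self.runs.append(run)
        self.pool.release(self.row_size * len(self.buffer))
        self.buffer = []

    @staticmethod
    def _read_run(run):
        while True:
            try:
                yield pickle.load(run)
            except EOFError:
                return

    def sorted_rows(self):
        """Merge the in-memory buffer with every spilled run (single pass)."""
        return heapq.merge(sorted(self.buffer), *(self._read_run(r) for r in self.runs))

    def close(self) -> None:
        for run in self.runs:
            run.close()
```

Splitting the pool into a soft and a hard limit is the design choice that gives both behaviors: operators get a chance to degrade to disk first, and only a reservation that still cannot fit after spilling kills the query.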

Our Takeaway: Expect next-gen engines to ship with first-class resource governors, not bolt-ons.

🚦 Stopping the Bronze Data Deluge

A Reddit thread tackles medallion-layer mayhem when incremental feeds piggyback on full loads and accidentally delete 95% of the data.

• Hash-based “delete-on-absence” logic breaks with sparse incrementals: every row missing from a partial feed looks deleted

• Community suggests adding load_type, is_current, and soft-delete flags instead of hard deletes

• Keep only 30–90 days in Bronze; archive deep history to cold storage and manage SCD Type 2 in Silver
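Here is a sketch of the community's suggestion, assuming a keyed target table and a hypothetical apply_load() helper: delete-on-absence runs only when load_type is "full", and even then rows are soft-deleted with an is_deleted flag rather than removed. SCD 2 history (is_current, validity dates) would live in Silver and is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Row:
    key: str
    payload: str
    is_deleted: bool = False  # soft-delete flag instead of a hard DELETE


def apply_load(target: dict[str, Row], batch: list[tuple[str, str]], load_type: str) -> dict[str, Row]:
    """Merge one batch into the target table (hypothetical helper).

    - "incremental": upsert only; keys absent from the batch are left alone.
    - "full": upsert, then soft-delete keys missing from the batch.
    """
    if load_type not in ("full", "incremental"):
        raise ValueError(f"unknown load_type: {load_type!r}")
    result = dict(target)
    seen = set()
    for key, payload in batch:
        seen.add(key)
        result[key] = Row(key, payload)  # insert, update, or revive a soft-deleted key
    if load_type == "full":
        for key, row in target.items():
            if key not in seen and not row.is_deleted:
                result[key] = replace(row, is_deleted=True)
    return result
```

A one-row incremental now touches one row instead of flagging everything else as gone, and even a bad full load only flips flags that can be reverted.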

Our Takeaway: Treat Bronze as a transient landing zone, preserve history higher up, and let incrementals append, not annihilate.

🧊 Delta, Iceberg & Hudi: Same Parquet, Different Vibes

Another lively debate compares the three lakehouse table formats to Oracle, Postgres, and MySQL: different logos, same spoon.

• Hudi wins for low-latency streaming upserts; Delta shines in Databricks’ managed world; Iceberg rules with vendor-neutral engine support

• All wrap Parquet files with metadata layers; feature gaps are narrowing fast

• Consensus: as of 2025, Iceberg is the safest long-term bet for neutrality across Snowflake, Flink, Trino & friends

Our Takeaway: Master the core concepts (partitioning, snapshots, manifest files) and you can hop between formats as the ecosystems blur. Btw, this week we launched improved support for Delta, Iceberg, Hudi, and Polaris.
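To make those concepts concrete, here is a toy model in Python, assuming one flat manifest per snapshot. Real formats add layers (Iceberg uses metadata files, manifest lists, and manifests; Delta uses a JSON transaction log in _delta_log; Hudi uses a timeline), but the shared idea is the same: each commit produces a new snapshot listing the Parquet files that make up the table, so time travel and partition pruning are metadata lookups. ToyTable, DataFile, and Snapshot are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class DataFile:
    path: str          # a Parquet file
    partition: str     # e.g. an order_date value
    record_count: int


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]
    files: Tuple[DataFile, ...]  # the toy "manifest": every live file at this point


class ToyTable:
    def __init__(self) -> None:
        self.snapshots: Dict[int, Snapshot] = {}
        self.current_id: Optional[int] = None

    def commit(self, added: Iterable[DataFile] = (), removed: Iterable[str] = ()) -> int:
        """Write a new snapshot; older snapshots (and their files) stay readable."""
        base = self.snapshots[self.current_id].files if self.current_id is not None else ()
        gone = set(removed)
        files = tuple(f for f in base if f.path not in gone) + tuple(added)
        new_id = len(self.snapshots) + 1
        self.snapshots[new_id] = Snapshot(new_id, self.current_id, files)
        self.current_id = new_id
        return new_id

    def scan(self, partition: Optional[str] = None, snapshot_id: Optional[int] = None) -> List[str]:
        """Plan a read from metadata alone: pick a snapshot, then prune by partition."""
        sid = self.current_id if snapshot_id is None else snapshot_id
        if sid is None:
            return []
        return [f.path for f in self.snapshots[sid].files
                if partition is None or f.partition == partition]
```

Because a commit only writes new metadata and never rewrites old snapshots, readers of an earlier snapshot_id keep seeing a consistent file list, which is the property all three formats build on.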

💡 Product Launch

Product Update: Improved support for Iceberg, Polaris, Hudi & Delta Lake

Our improved support lets you natively read and browse Iceberg, Hudi, and Delta Lake tables, plus Polaris-managed Iceberg catalogs, inside one e6data workspace, with zero-copy ingestion, cross-catalog joins, Polaris RBAC/Unity Catalog enforcement, and a unified, metadata-aware query path.

📚 Blog Release

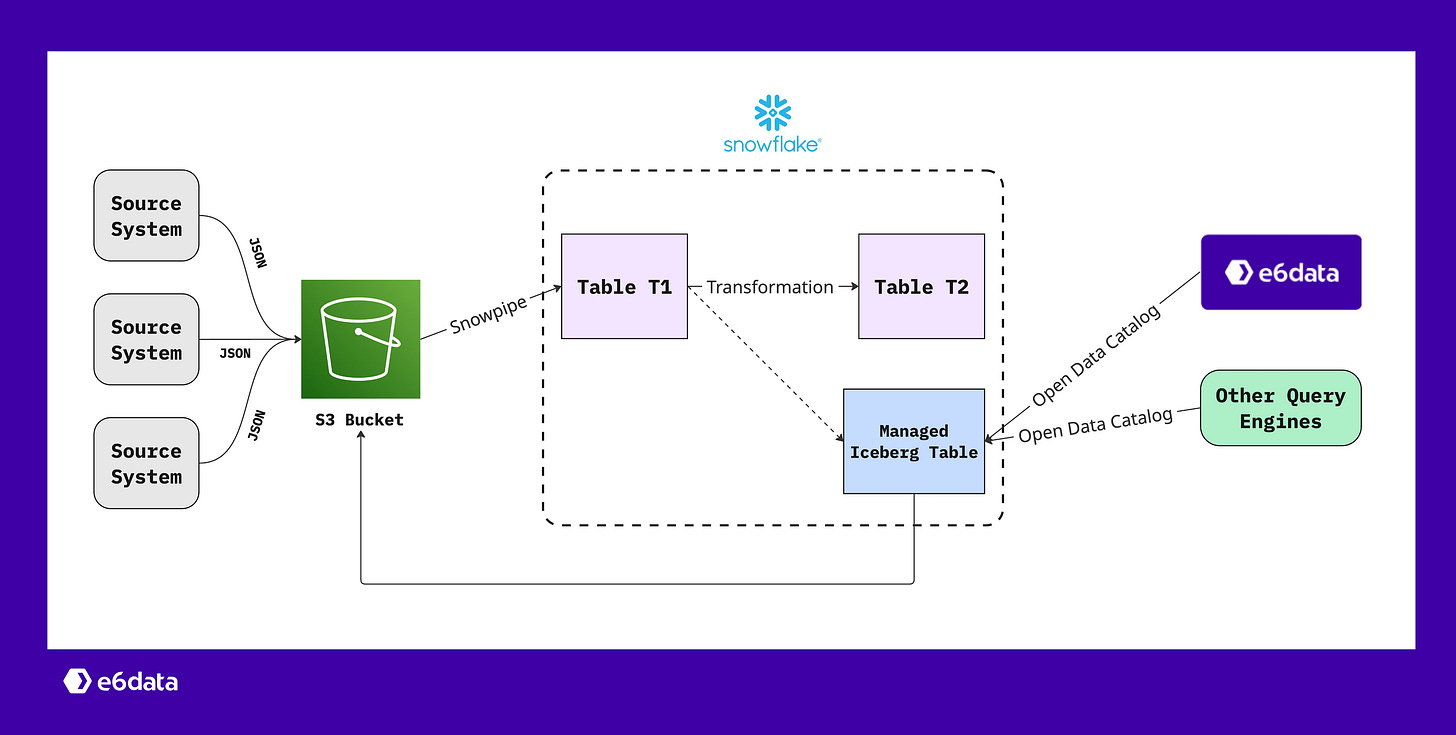

Snowflake Snowpipe → Managed Iceberg Tables (with sync checks)

Our lead engineer wrote a complete blueprint for building a modern Snowflake pipeline that ingests with Snowpipe, transforms via SQL/stored procedures, writes to Snowflake‑managed Iceberg tables, and adds row‑level sync checks—all while keeping the data open and queryable by any Iceberg-compatible engine.

Key highlights:

• End‑to‑end SQL: landing/core tables, CREATE ICEBERG TABLE, and a transform_orders() stored procedure

• Snowpipe AUTO_INGEST from S3 to landing tables; continuous or scheduled loads

• Data quality & sync checks (row counts, diffs, optional checksums) between curated and Iceberg tables

• Snowflake Tasks to automate the daily run (CRON 0 2 * * * UTC)

• Open catalog, multi‑engine interoperability: query the same Iceberg data from Snowflake, Spark, Trino, Presto, e6data, etc.

Benefits called out: automated ingestion, a curated and governed analytics layer, and zero lock‑in via Iceberg’s open metadata.
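The blog's sync checks presumably run as SQL inside Snowflake; as a language-neutral sketch of the logic, here is a Python version that compares row counts, key-level diffs, and an order-independent checksum (sum of per-row SHA-256 prefixes modulo 2^64) between two row sets. sync_check() and its output fields are hypothetical. In Snowflake SQL, COUNT(*) and the order-insensitive HASH_AGG aggregate would be natural equivalents, though the post's exact queries may differ.

```python
import hashlib
from typing import Any, Dict, Sequence, Tuple


def row_fingerprint(row: Tuple[Any, ...]) -> int:
    """64-bit fingerprint of one row (assumes repr() is stable for its values)."""
    digest = hashlib.sha256(repr(row).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def table_checksum(rows: Sequence[Tuple[Any, ...]]) -> int:
    """Order-independent checksum: sum of row fingerprints modulo 2**64."""
    return sum(row_fingerprint(r) for r in rows) % 2**64


def sync_check(curated: Sequence[Tuple[Any, ...]],
               iceberg: Sequence[Tuple[Any, ...]],
               key_index: int = 0) -> Dict[str, Any]:
    """Compare a curated table with its Iceberg copy (hypothetical report shape)."""
    curated_keys = {r[key_index] for r in curated}
    iceberg_keys = {r[key_index] for r in iceberg}
    return {
        "row_count_match": len(curated) == len(iceberg),
        "missing_in_iceberg": sorted(curated_keys - iceberg_keys),
        "extra_in_iceberg": sorted(iceberg_keys - curated_keys),
        "checksum_match": table_checksum(curated) == table_checksum(iceberg),
    }
```

Summing fingerprints rather than hashing a concatenation is what makes the checksum order-independent, which matters because neither table guarantees row order. The key diff catches missing or extra rows; the checksum additionally catches rows whose values changed.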

🤝 Community & Events

Lakehouse Days Replay – ICYMI, the full recording of our “From Stream to Lakehouse” is up on e6data’s YouTube.

Building a Modern Data Pipeline in Snowflake: From Snowpipe to Managed Iceberg Tables with Sync Checks – an in-depth blog series by our engineering team

Meet Us Today at MachineCon USA – We’ll be on-site; come say hi!

Hiring: We’re growing! Check out open engineering roles [here]